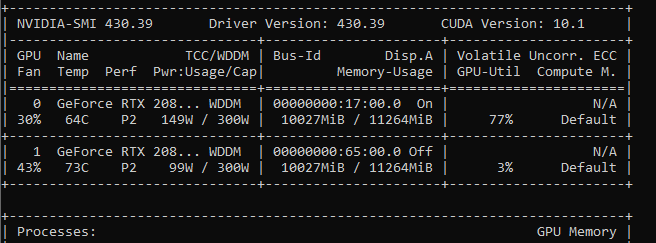

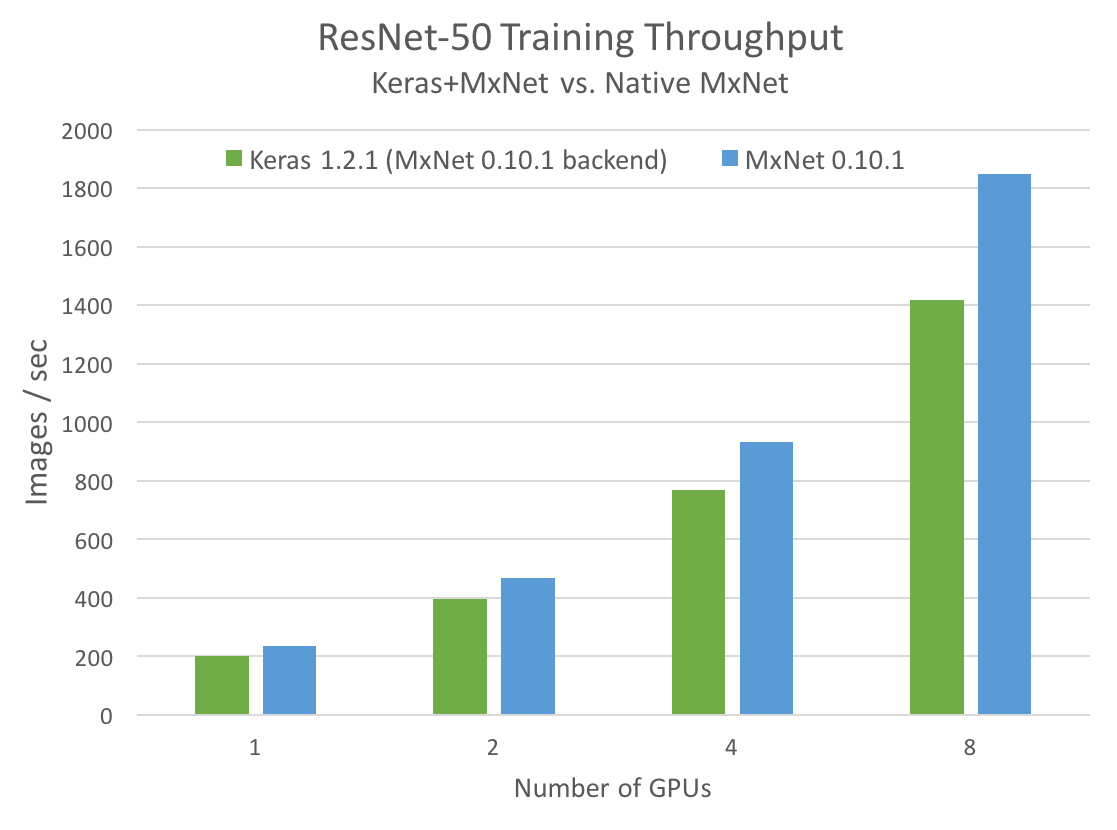

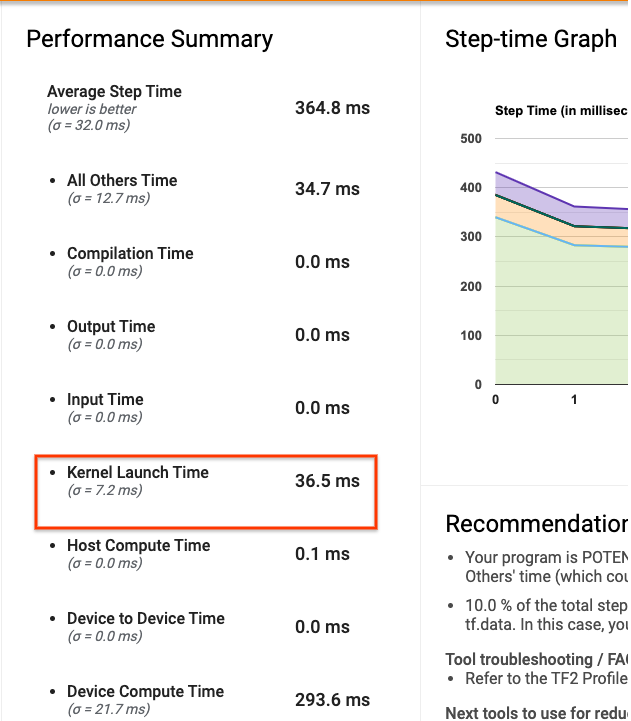

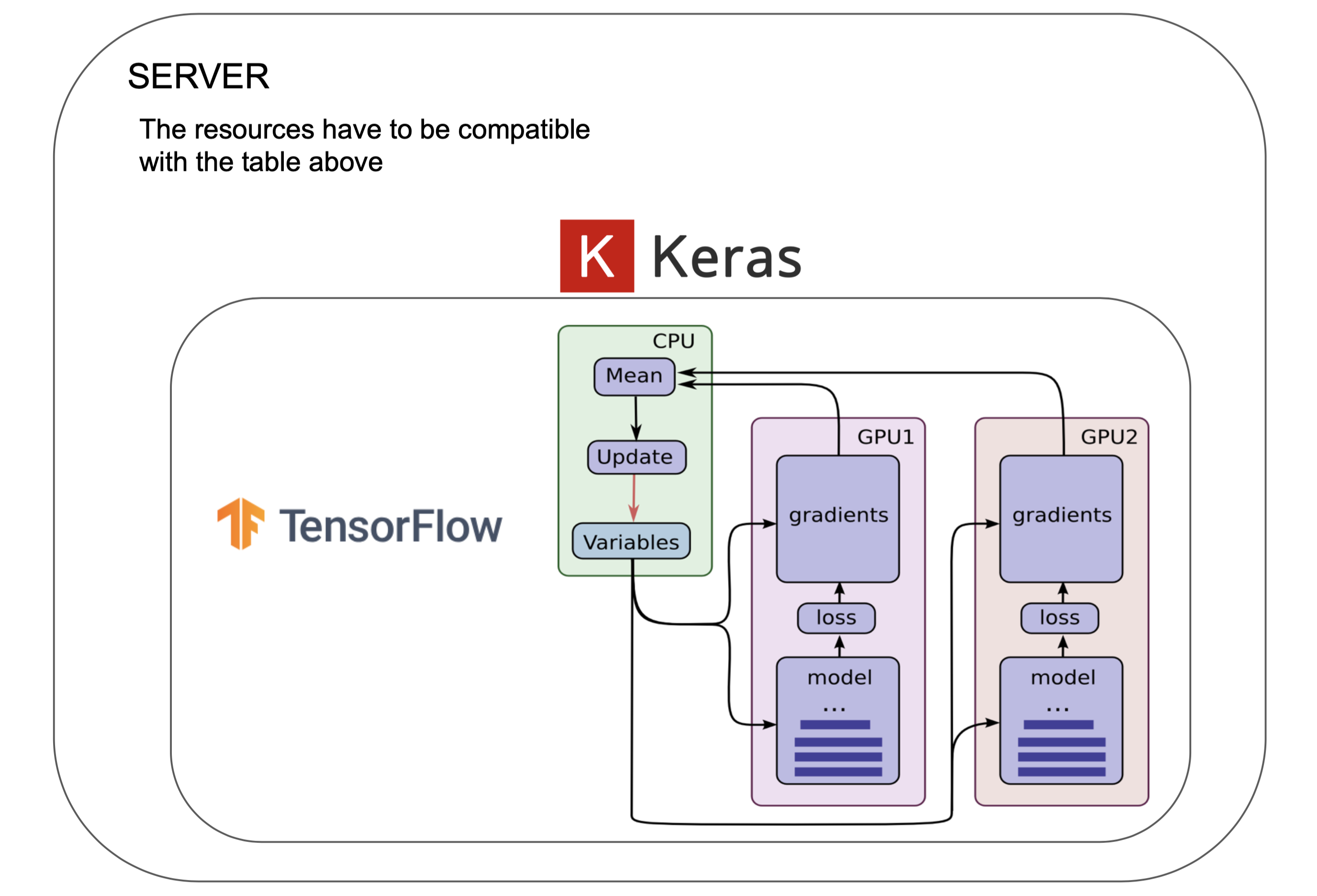

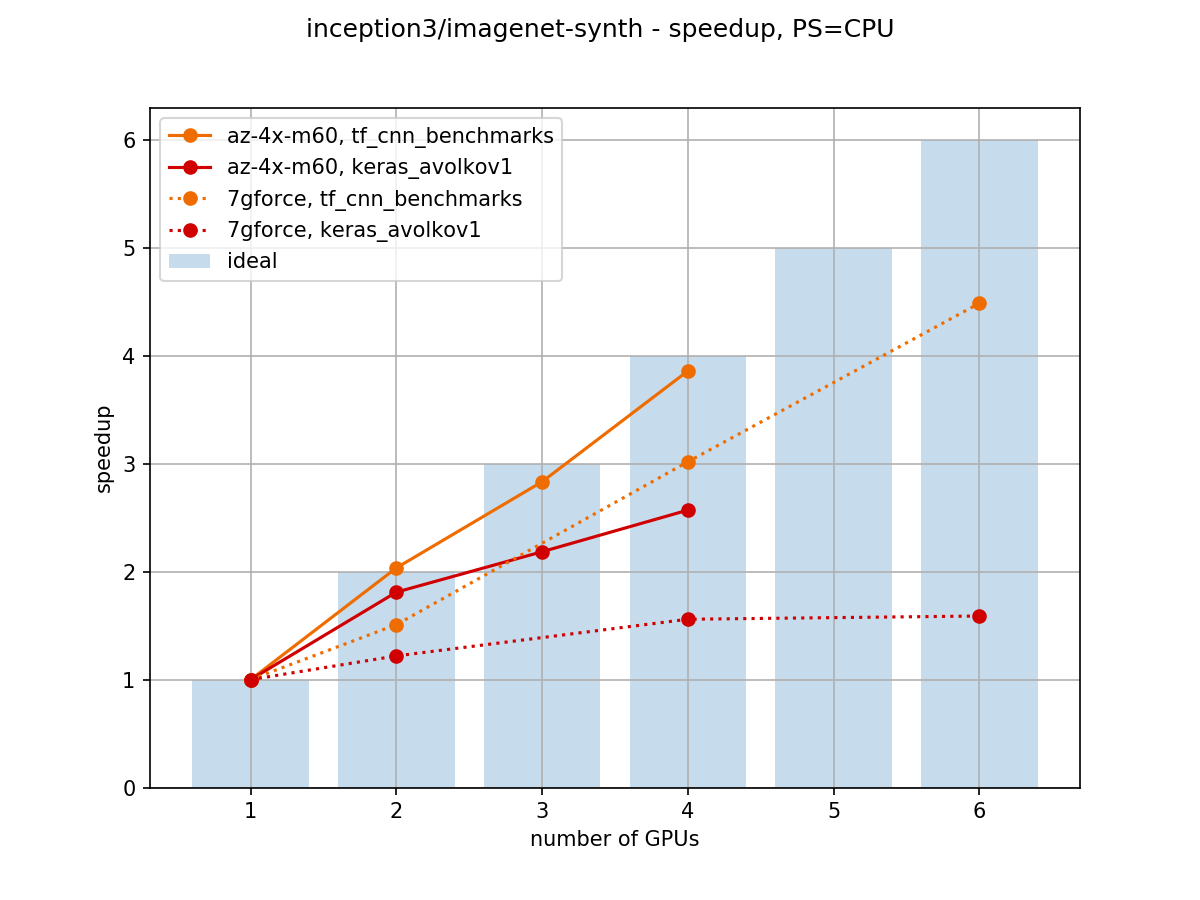

Towards Efficient Multi-GPU Training in Keras with TensorFlow | by Bohumír Zámečník | Rossum | Medium

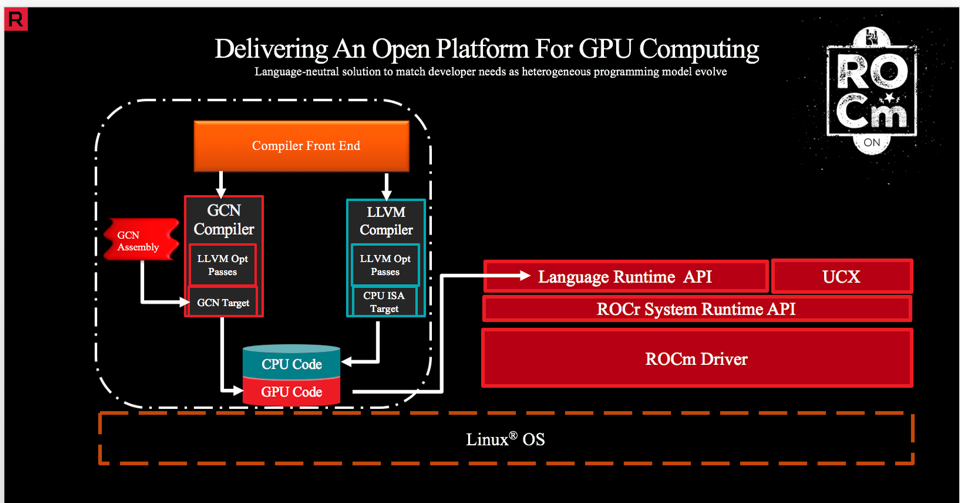

Use an AMD GPU for your Mac to accelerate Deeplearning in Keras | by Daniel Deutsch | Towards Data Science

Using the Python Keras multi_gpu_model with LSTM / GRU to predict Timeseries data - Data Science Stack Exchange

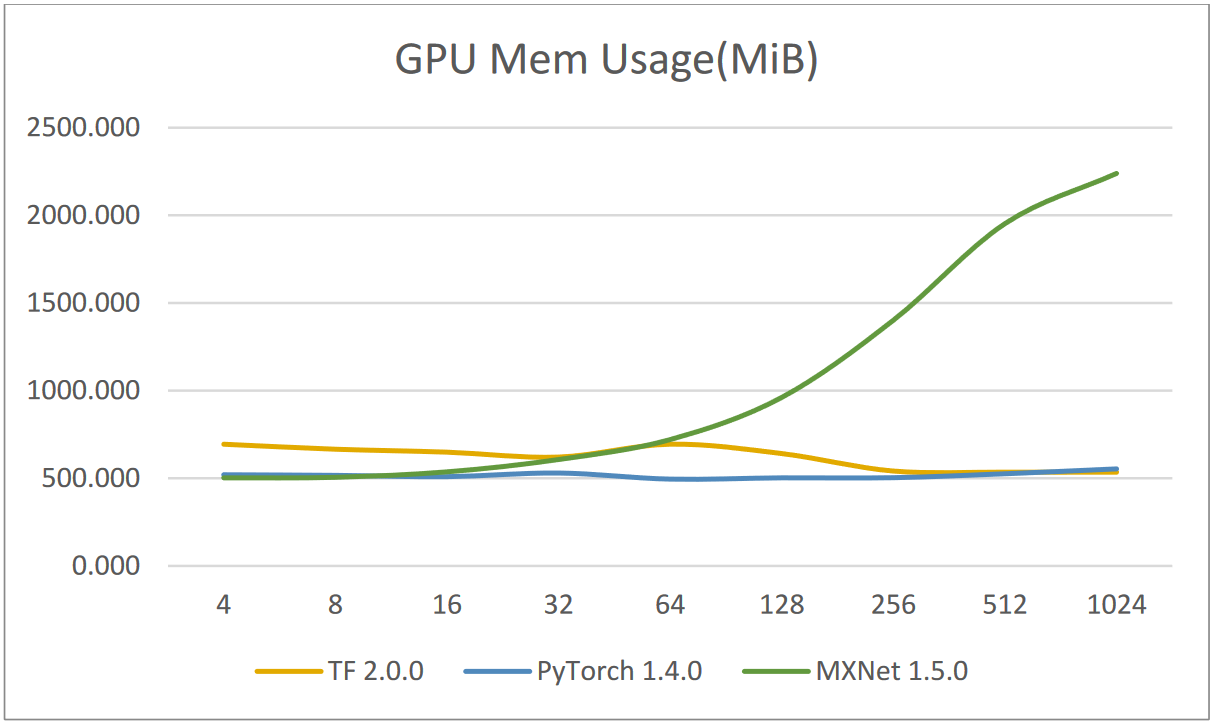

Interaction of Tensorflow and Keras with GPU, with the help of CUDA and...